System Design - Rate Limiter

Rate limiter is a vital component in large-scale web applications, as it controls traffic to the application servers to ensure a smooth experience for the majority of the users. It has become a critical component due to the rise in DDoS attacks, and it also helps limit computationally intensive operations for users.

Requirements

- Low latency: The rate-limiting component shouldn’t slow down the HTTP response

- Memory footprint should be minimal to accommodate a large number of concurrent requests

- Distributed rate limiting

- Exception Handling: Show proper response message for rate-limited requests

- High Fault tolerance: The requests shouldn’t fail in case the rate-limiting component fails

Draft Design

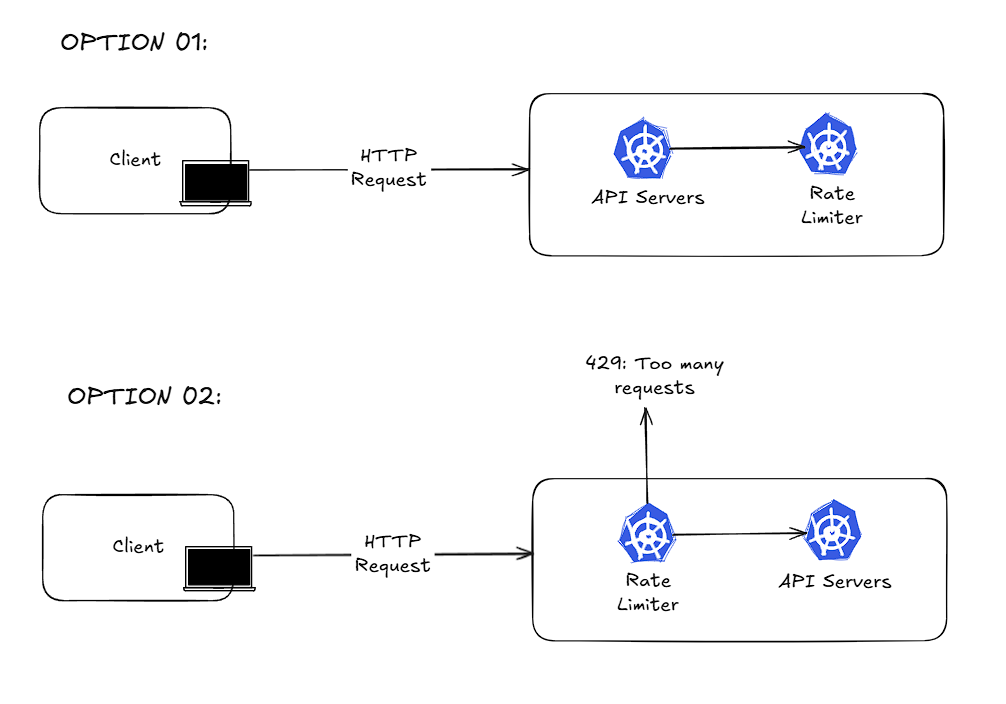

Where to put the rate limiter?

- Client-side: Generally, client requests can be easily tampered with and bypassed, so it’s better not to place an important component like rate-limiting on the client side

- Server-side:

Rate-limiting Algorithm

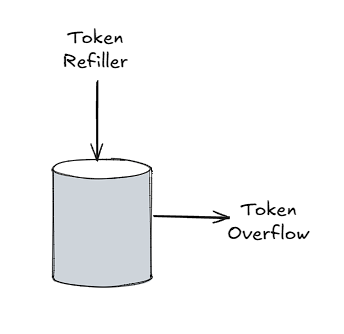

There are several rate-limiting algorithms, such as Token-bucket and leaking bucket. However, here we discuss specifically the token bucket algorithm only, as it’s the most commonly used one.

It involves two parameters:

- Bucket size: A bucket is maintained for each type of request of each user. The bucket size represents the number of maximum tokens allowed in the bucket. Each request consumes a token from the bucket, and if there are no tokens left in the bucket, then the request is rejected.

- Refill rate: number of tokens put into the bucket every unit time.

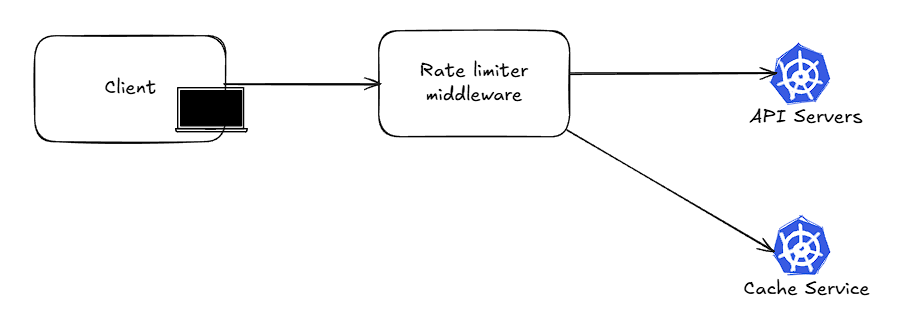

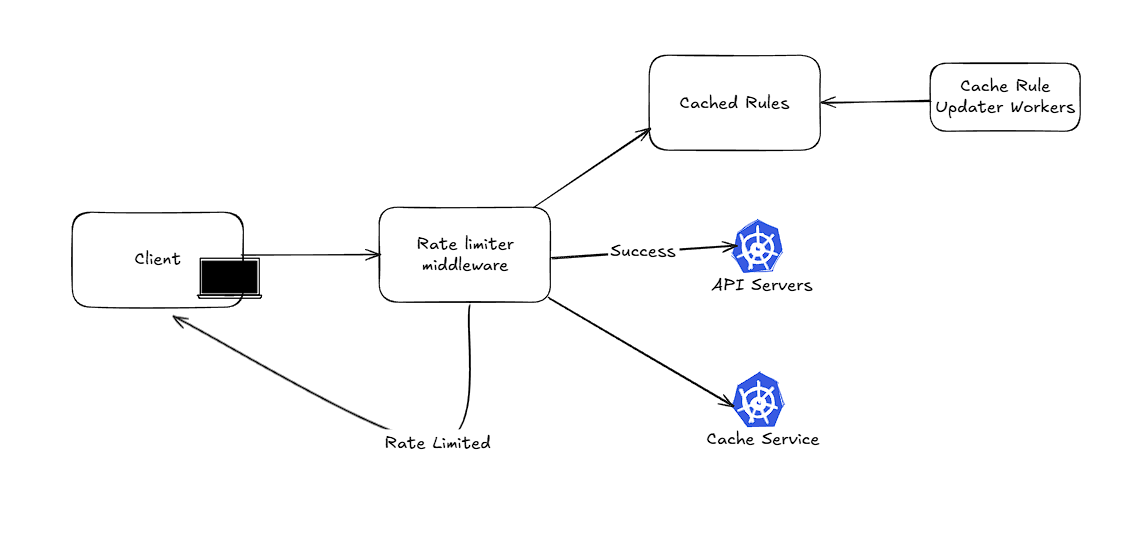

Using a database to store the token bucket-specific parameter isn’t advisable, as it requires disk access. Instead, it would be better to use an in-memory cache.

Detailed Design

Handling Race Condition

Locks on the whole system generally slow down the system. Redis provides an alternative way to achieve atomicity through Lua scripting, where the Lua script is executed atomically on the server side. This is a common way to perform checks on Redis, as the scripts for the token bucket are executed ideally within 0.3 ms.

Synchronization

It’s recommended to use a centralized cache data store; otherwise, synchronization between cache service deployments is a difficult task. Generally, the synchronization is achieved in eventual consistency.

Cloud Service Provider Offerings

All the prominent cloud service providers offer cache service. AWS offers ElastiCache, which internally uses Redis, and Azure also provides a similar offering through Azure Cache. GCP offers Memorystore service for both Memcached and Redis.

References

- Should I rate-limit packets with iptables?

- Rate Limit Requests with Iptables

- Scaling your API with rate limiters

- Scaling your API with rate limiters

- Better Rate Limiting With Redis Sorted Sets

- How we built rate limiting capable of scaling to millions of domains

- Lua scripting in Redis for performance gain

- Rate Limiting using Redis + Golang

- Atomicity with Lua

- Ratelimit code opensourced by Lyft

- Announcing Ratelimit : Go/gRPC service for generic rate limiting

- Amazon ElastiCache